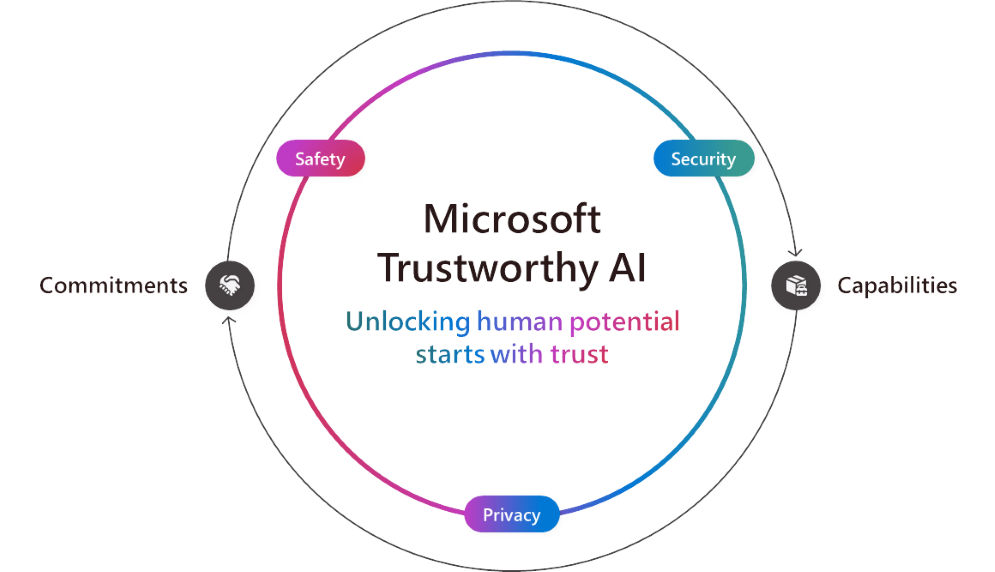

As AI continues to evolve, everyone has a role to play in ensuring it has a positive impact on organizations and communities worldwide. At Microsoft, we are committed to helping customers build and use AI that is secure, safe, and private: AI that can be trusted.

Our commitment to Trustworthy AI is backed by industry-leading technology and practices. We focus on safeguarding users at every level, ensuring both developers and customers are protected. Today, we’re excited to announce new product capabilities aimed at enhancing the security, safety, and privacy of AI systems.

Strengthening Security with New Capabilities

At Microsoft, security remains our top priority, reinforced by our expanded Secure Future Initiative (SFI), which embodies our commitment to making customers more secure. This week, we released our first SFI Progress Report, detailing updates across culture, governance, technology, and operations. The initiative is guided by three core principles: secure by design, secure by default, and secure operations.

In addition to our well-established Microsoft Defender and Purview offerings, our AI services provide essential security controls, such as preventing prompt injections and copyright violations. Building on this, we’re introducing two new features:

Evaluations in Azure AI Studio: These help customers proactively assess risks in their AI applications.

Microsoft 365 Copilot Transparency: Soon, Microsoft 365 Copilot will offer insights into web queries, empowering admins and users to understand how web searches enhance Copilot responses.

These security features are already making an impact. Cummins, a global leader in engine manufacturing and clean energy technologies, uses Microsoft Purview to strengthen its data security by automating data classification and labeling. EPAM Systems, a software engineering firm, deployed Microsoft 365 Copilot, citing the confidence it gained from Microsoft's data protection policies.

Driving Safety Through Responsible AI

In 2018, Microsoft established its Responsible AI principles, which continue to guide how we build and deploy AI safely. These principles help us avoid harmful content, bias, and unintended risks, all while ensuring the AI systems we build are robust and secure. Over time, we’ve made significant investments in governance, tools, and processes to uphold these values, and we are committed to sharing our best practices with customers.

Today, we’re unveiling several new safety-focused capabilities:

Correction in Groundedness Detection: This capability in Azure AI Content Safety's groundedness detection feature helps fix hallucination issues in real time.

Embedded Content Safety: This feature allows customers to integrate Azure AI Content Safety directly into devices, ensuring safety even when cloud connectivity is unavailable.

Output Quality Assessments: Azure AI Studio now supports evaluations that assess the quality of outputs and how often an AI application outputs protected material.

Protected Material Detection for Code: Now in preview, this feature helps detect when output matches pre-existing code in public source repositories, supporting collaboration and transparency.

Customers are already using these features to build safer AI applications. For instance, Unity, a 3D game development platform, used Azure OpenAI Service to create Muse Chat, an AI assistant that applies content filters to support responsible use. Similarly, ASOS, a UK-based fashion retailer, uses Azure AI Content Safety to help deliver high-quality AI-powered customer experiences.

Elevating Privacy in AI Systems

Data is foundational to AI, and protecting customer data is at the core of Microsoft’s privacy principles. Today, we are expanding on these principles with new privacy-enhancing capabilities:

Confidential Inferencing in the Azure OpenAI Service Whisper model: This new feature, currently in preview, enables generative AI applications to maintain verifiable end-to-end privacy during inferencing. This is particularly important for industries such as healthcare, finance, and retail.

Azure Confidential VMs with NVIDIA H100 Tensor Core GPUs: These are now generally available, offering customers the ability to secure data directly on the GPU, ensuring privacy during AI computations.

Azure OpenAI Data Zones for the EU and U.S.: Coming soon, this functionality will give customers control over data processing and storage for generative AI applications, helping ensure compliance with regional regulations.

Customers are already excited about these innovations. F5, an application security provider, is using Azure Confidential VMs to develop AI-powered security solutions while safeguarding data privacy. Meanwhile, Royal Bank of Canada (RBC) has integrated Azure confidential computing into its platform, allowing it to enhance its AI models while maintaining data privacy.

Trustworthy AI: Achieving More Together

AI built on trust can unlock extraordinary potential, transforming business processes, enhancing customer engagement, and improving everyday life. Microsoft’s continued focus on advancing security, safety, and privacy enables our customers to develop and use AI in ways that benefit everyone. Trustworthy AI is central to our mission as we strive to expand opportunity, protect rights, and promote sustainability in all we do. Together, we can achieve more with AI that people can trust.